How GrowthMentor is Using Generative AI for Marketplace Matching

Every marketplace faces the difficult task of efficiently connecting supply with demand.

Each user is different and has unique needs, preferences, and nuances, seeking a specific ‘something’ within a product – and they need to find it quickly. The point? Fast product discovery, helping the user find what they’re after quickly, guiding them to their “aha” moment in the shortest possible time frame.

But with so much variety in marketplace offerings, effective product discovery can be a tough challenge.

A matching algorithm or recommendation engine is a good solution, making it easier to connect users with the right products. But traditionally, this was out of reach for most startups, which couldn’t afford to hire an entire team of machine learning engineers.

Enter the era of large language models and generative AI. This technology has made it faster and simpler than ever to build recommendation algorithms that meet users’ unique needs in a personalized, efficient way.

And this is exactly what we did for GrowthMentor.

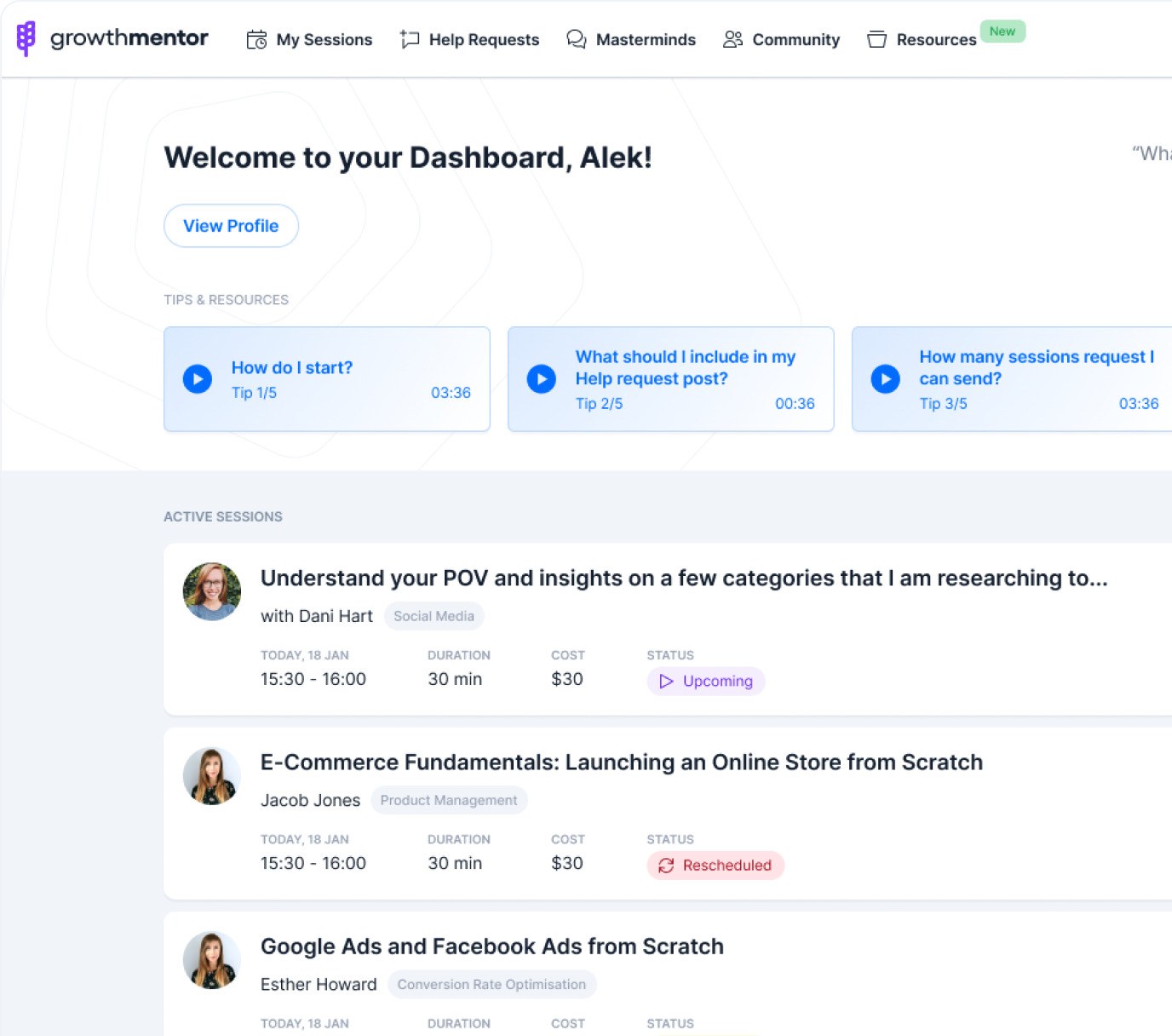

GrowthMentor is a platform that offers 1:1 mentorship for startups. With hundreds of mentors to choose from, all with very detailed profiles, it could be overwhelming for a mentee to find the right fit quickly, which slows down product discovery and creates inefficiencies in the marketplace.

The GrowthMentor team typically managed this by speaking to each mentee and providing personalized suggestions based on the mentors they knew, but this isn’t manageable at scale.

On-demand matching using Generative AI

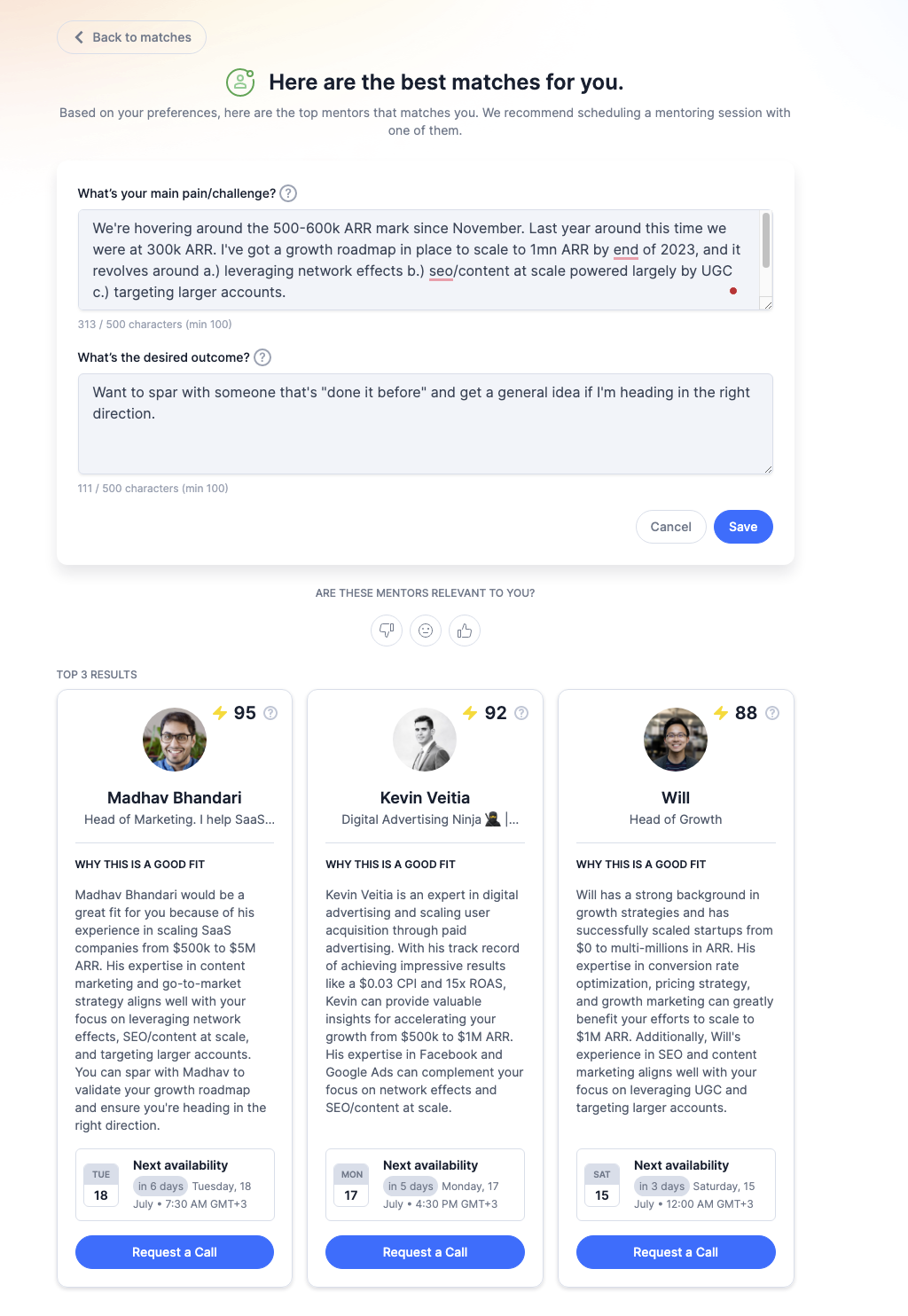

The ask: Help mentees find the right mentor quickly.

The task: On-demand matching – reduce the search friction in finding mentors by providing personalized mentor suggestions to mentees based on their challenges.

The goal: Improve acquisition and activation by reducing time-to-value for users, and reinforce product retention and engagement on the platform.

How it works

Step 1: Embeddings

A mentee fills in two fields: their challenges and their desired outcomes. Based on this information and data from mentors (including areas of expertise, background and experience, reviews, etc.), we create vectorized embeddings.

In simple terms, this is a method to quantify and represent textual information. Think of it like positioning words and sentences as points within a multi-dimensional space. The closer these points are to each other, the more similar their meanings.

To help visualize this, imagine we have three mentors and one mentee. We want to determine which mentor is the most compatible match for the mentee.

    Mentee
    | \
    |  \
    |   \
    |    \
    |     Mentor 1
    |      \
    |       \
    |        \
    |         Mentor 2
    |          \
    |           \
    |            Mentor 3

In this multi-dimensional space, each ‘Mentor’ is a point and the ‘Mentee’ is another point. The lines connecting the ‘Mentee’ to each ‘Mentor’ represent the “distance” or degree of difference between them. The proximity of the Mentor to the Mentee signifies the compatibility between them, with closer points indicating more similar attributes such as interests, areas of expertise, and so on.

To compute this “distance”, we use a measure known as cosine similarity: the cosine of the angle between two vectors. A score close to 1 means the mentor’s profile (now vectorized and represented as a point in space) points in nearly the same direction as the mentee’s needs and preferences, so a higher score means a closer match.

By comparing all mentors to the mentee in this way, we can rank the mentors based on their match compatibility with the mentee’s needs.
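To make the ranking step concrete, here is a minimal sketch in plain Python. It is not GrowthMentor’s production code: the three-dimensional vectors are made up for illustration (real embeddings typically have hundreds or thousands of dimensions), and the function names `cosine_similarity` and `rank_mentors` are our own.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction, so treat it as "no similarity".
        return 0.0
    return dot / (norm_a * norm_b)


def rank_mentors(mentee_vec, mentor_vecs, top_k=3):
    """Return the top_k (name, score) pairs, highest similarity first."""
    scored = [
        (name, cosine_similarity(mentee_vec, vec))
        for name, vec in mentor_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings, invented for this example.
mentee = [0.9, 0.1, 0.3]
mentors = {
    "Mentor 1": [0.8, 0.2, 0.4],
    "Mentor 2": [0.4, 0.6, 0.5],
    "Mentor 3": [0.1, 0.9, 0.2],
}

ranking = rank_mentors(mentee, mentors)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

With these toy numbers, Mentor 1 comes out on top because its vector points in almost the same direction as the mentee’s, mirroring the picture above.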

Why use vectorized embeddings?

They offer us several advantages:

- Contextual Understanding: Traditional methods like keyword matching can often feel like trying to find a needle in a haystack. They focus on specific words or phrases but lack the finesse to understand the overall context or sentiment. This could lead to matches that technically fit the search criteria but miss the point in practice. Vectorized embeddings flip this scenario. Instead of looking for a specific needle, they map out the entire haystack, understanding the bigger picture. They go beyond just matching keywords and dive into the contextual meaning of the text.

- Scalability: As GrowthMentor continues to grow and our mentor database expands, scalability becomes crucial. Vectorized embeddings shine in this area: a mentor’s embedding can be computed once and reused, so matching a new mentee comes down to embedding their input and comparing it against vectors that already exist. The approach not only handles current data but is built to accommodate future growth seamlessly.

- Versatility: The beauty of vectorized embeddings lies in their adaptability. This technique isn’t restricted to specific types of text data; it can be applied to bios, reviews, feedback, or even conversation transcripts. This versatility provides consistency and reusability, making our matching system more robust and comprehensive. It also allows us to continuously refine and improve the matching process, leveraging all types of mentor data to find the best fit for our mentees.

In essence, vectorized embeddings are the backbone of our advanced matching system, giving us the context, scalability, and versatility to provide a superior mentor-mentee matching experience.
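One general reason embeddings scale well is that mentor vectors can be prepared ahead of time. The sketch below illustrates that idea with a small in-memory index. The `MentorIndex` class, the mentor ids, and the two-dimensional vectors are all hypothetical. Storing unit-length vectors means the cosine similarity at query time reduces to a dot product.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class MentorIndex:
    """Hypothetical in-memory store of pre-normalized mentor embeddings."""

    def __init__(self):
        self._vectors = {}

    def add(self, mentor_id, embedding):
        # Normalization happens once, when the mentor is added or updated.
        self._vectors[mentor_id] = normalize(embedding)

    def search(self, query_embedding, top_k=3):
        # Only the mentee's query is processed at request time.
        query = normalize(query_embedding)
        scored = [
            (mentor_id, sum(q * v for q, v in zip(query, vec)))
            for mentor_id, vec in self._vectors.items()
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = MentorIndex()
index.add("mentor-a", [1.0, 0.0])
index.add("mentor-b", [0.6, 0.8])
index.add("mentor-c", [0.0, 2.0])

results = index.search([3.0, 1.0], top_k=2)
print(results)
```

A linear scan like this is fine for hundreds of mentors. At much larger sizes, a dedicated vector database or approximate nearest-neighbor index would be the usual next step.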

Step 2: Generative AI integration

Once we’ve identified our top three matches based on vectorized embeddings, we invite a generative AI model (like GPT-4) to join the party and bring matchmaking to the next level.

GPT-4, a sophisticated language model, has been trained on a diverse range of internet text. But, more than just understanding the text, it is built to predict what comes next. This predictive capability isn’t just about guessing the next word in a sentence; it can anticipate and generate whole sentences or paragraphs that follow a given prompt. This understanding of context and ability to generate relevant, natural-sounding text gives GPT-4 an edge in refining our mentor matches.

In action, GPT-4 sorts through the initial matches and applies its understanding of context and language to reassess the suitability of each mentor. It can pick up on subtleties that the vectorized embeddings missed, re-ranking the mentors so that the best fit for the mentee’s unique needs and challenges is highlighted.

GPT-4’s job doesn’t end at just re-ranking. The model uses its contextual understanding and text generation abilities to create a personalized text snippet for each mentee, explaining why a particular mentor is a great match. This step really brings out the power of GPT-4 as it can synthesize a lot of information and present it in a digestible and meaningful way. For instance, it can explain why a mentor, who is an expert in SaaS marketing, is a good match for a mentee looking to scale their SaaS startup.
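The re-ranking step can be sketched as two small functions: one that builds the prompt from the shortlisted mentors, and one that validates the model’s reply. This is an illustration under our own assumptions, not the production pipeline. The JSON reply format, the function names, and the `fake_llm` stub (which stands in for a real model call) are all hypothetical.

```python
import json


def build_rerank_prompt(challenge, outcome, candidates):
    """Assemble a prompt asking the model to re-rank the shortlisted mentors."""
    lines = [
        "You are helping a mentee choose a mentor.",
        f"Mentee challenge: {challenge}",
        f"Desired outcome: {outcome}",
        "Candidate mentors:",
    ]
    for candidate in candidates:
        lines.append(f"- {candidate['name']}: {candidate['bio']}")
    lines.append(
        "Rank the candidates from best to worst fit. Reply with only a JSON "
        'list of objects with the keys "name" and "reason".'
    )
    return "\n".join(lines)


def parse_rerank_response(text, candidates):
    """Keep only known mentors; fall back to the embedding order if needed."""
    known = {c["name"] for c in candidates}
    fallback = [{"name": c["name"], "reason": ""} for c in candidates]
    try:
        items = json.loads(text)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(items, list):
        return fallback
    ranked, seen = [], set()
    for item in items:
        if not isinstance(item, dict):
            continue
        name = item.get("name")
        # Drop names the model invented, and duplicates.
        if isinstance(name, str) and name in known and name not in seen:
            ranked.append({"name": name, "reason": str(item.get("reason", ""))})
            seen.add(name)
    # Mentors the model left out keep their original relative order.
    ranked.extend(entry for entry in fallback if entry["name"] not in seen)
    return ranked


def fake_llm(prompt):
    """Stand-in for a real model call; returns a canned reply for the demo."""
    return json.dumps([
        {"name": "Mentor B", "reason": "Has scaled SaaS products before."},
        {"name": "Mentor Z", "reason": "Not one of the candidates."},
        {"name": "Mentor A", "reason": "Strong SaaS marketing background."},
    ])


candidates = [
    {"name": "Mentor A", "bio": "SaaS marketing expert"},
    {"name": "Mentor B", "bio": "Founder who scaled a SaaS startup"},
    {"name": "Mentor C", "bio": "E-commerce specialist"},
]
prompt = build_rerank_prompt("Scaling a SaaS startup", "Faster growth", candidates)
reranked = parse_rerank_response(fake_llm(prompt), candidates)
for entry in reranked:
    print(entry["name"], "-", entry["reason"])
```

Validating the reply against the known shortlist is one simple guard against hallucinated mentors: anything the model invents is dropped, and anything it forgets is appended in the original order.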

We optimize this process through ‘prompt engineering’, a method to guide an AI model’s response in a certain direction. Basically, we’re shaping the AI’s ‘thought process’ through specific instructions, examples, and context. We use different techniques, such as zero-shot prompting (instructions only) and few-shot prompting (instructions plus a handful of worked examples), to optimize responses, ensure they are tailored to the individual, and limit model hallucinations.

Here’s a simple example to illustrate:

Basic prompt: “Why is Mentor A a good match for the mentee?”

GPT-4 output: “Mentor A matches the mentee’s needs.”

Engineered prompt: “Given that the mentee needs help in scaling their e-commerce business, why would Mentor A, an experienced digital marketing strategist and e-commerce specialist, be a good match?”

GPT-4 output: “Given the mentee’s need for e-commerce growth, Mentor A is an ideal match. With extensive experience in digital marketing and a proven track record in e-commerce, Mentor A can provide valuable insights and actionable strategies to boost the mentee’s online sales. Their expertise in digital marketing could help the mentee optimize their advertising strategy and enhance their online visibility, potentially leading to increased customer acquisition and business growth.”
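The difference between zero-shot and few-shot prompting can be sketched in code. The example below builds a list of role/content messages, a layout many chat-style model APIs accept; that layout, the function names, and the sample texts are assumptions for illustration, and no real API is called.

```python
def format_request(mentee_need, mentor_name, mentor_profile):
    """Put the mentee's need and the mentor's profile into a single question."""
    return (
        f"Given that the mentee needs help with {mentee_need}, why would "
        f"{mentor_name}, {mentor_profile}, be a good match?"
    )


def build_match_messages(mentee_need, mentor_name, mentor_profile, examples=()):
    """Zero-shot when examples is empty; few-shot when worked examples are given."""
    messages = [{
        "role": "system",
        "content": (
            "Explain in two or three sentences why the mentor fits the mentee. "
            "Use only facts stated in the mentor profile."
        ),
    }]
    for example in examples:
        # Each worked example is a question followed by the answer we want.
        messages.append({
            "role": "user",
            "content": format_request(
                example["need"], example["mentor"], example["profile"]
            ),
        })
        messages.append({"role": "assistant", "content": example["explanation"]})
    messages.append({
        "role": "user",
        "content": format_request(mentee_need, mentor_name, mentor_profile),
    })
    return messages


zero_shot = build_match_messages(
    "scaling their e-commerce business",
    "Mentor A",
    "an experienced digital marketing strategist and e-commerce specialist",
)

few_shot = build_match_messages(
    "scaling their e-commerce business",
    "Mentor A",
    "an experienced digital marketing strategist and e-commerce specialist",
    examples=[{
        "need": "growing their SaaS startup",
        "mentor": "Mentor B",
        "profile": "an expert in SaaS marketing",
        "explanation": (
            "Mentor B's SaaS marketing expertise maps directly onto the "
            "mentee's goal of growing a SaaS startup."
        ),
    }],
)

print(len(zero_shot), len(few_shot))
```

The instruction to use only facts from the mentor profile is one common way to discourage hallucinated claims, and the worked examples show the model the tone and length we expect.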

Wrap-up

By leveraging vectorized embeddings and generative AI’s natural language processing, we’ve created an intelligent matching algorithm for GrowthMentor. This synthesis of technology and human experience has resulted in more accurate, personalized, and meaningful mentor matches. In fact, mentees who use the matching feature are engaging more than ever, doubling their session requests per week.

What does this mean for users? They are receiving matches that truly resonate with their unique challenges and desired outcomes. It’s a solution that cuts through the noise, offering users the ability to focus on what truly matters – growing, learning, and reaching their goals.

What does this mean for GrowthMentor? With users more engaged and requesting more sessions, we’re increasing the efficiency and effectiveness of the platform, enabling scale without compromising on the quality of matches and user satisfaction. We help users get to their “aha” moment faster, improving conversion rates, time-to-conversion, and ultimately, the company’s bottom line.

We’re continuously seeking to advance this feature within GrowthMentor, catering to an ever-growing community of dedicated mentees and mentors. And for other marketplaces and organizations looking to build scalable personalization into their tech stack, we’d love to chat.
