Practice Makes Perfect: How to Boost Your Prompt Engineering Skills

If you’ve been struggling to get share-worthy results out of AI models like ChatGPT and DALL-E, it’s time to ditch the trial and error and upgrade your prompt engineering skills.

Fortunately, Michael Taylor, O’Reilly author of “Prompt Engineering for Generative AI,” and Alex Lambropoulos, founder of Measurely.io, have the tips and tricks you need to improve.

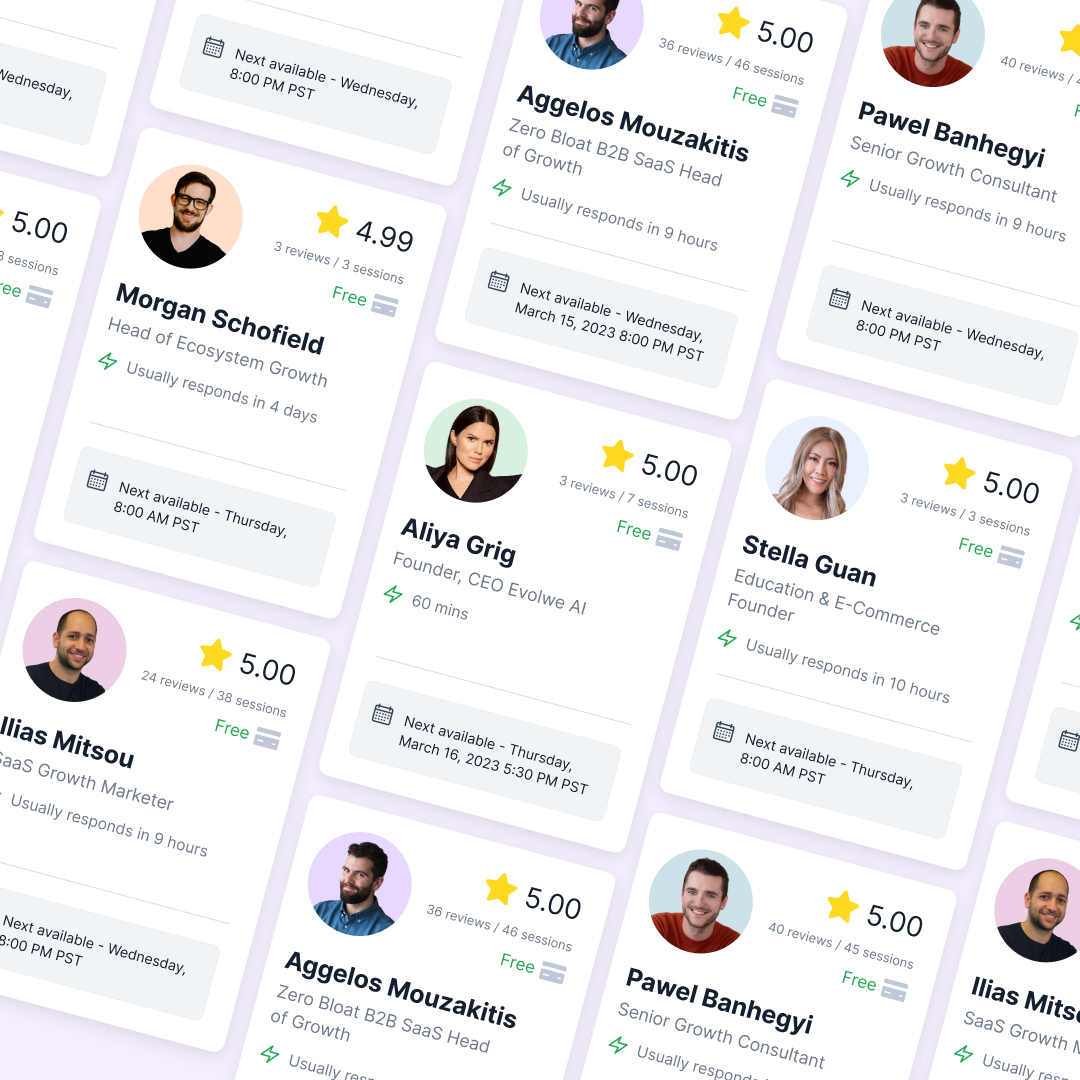

Your Featured Mentors

Alex Lambropoulos: Growth Analytics for Customer Acquisition & Retention, Matching, and CRO

Michael Taylor: Prompt Engineer and O’Reilly Author of “Prompt Engineering for Generative AI”

Why is AI So Inconsistent?

After using AI a few times, you’re bound to notice that the results are anything but consistent.

So why? Are we doing something wrong in our prompts?

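Well, it turns out the results are inconsistent partly because of the nature of the machine: these are probabilistic models, so the same prompt can produce different outputs from one run to the next.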

However, Michael had a far more intriguing take. Essentially, we need to stop thinking of working with AI as working with a computer and start thinking of it as working with a coworker.

Of course, just as with explaining a task to a coworker, things get much harder when the task is more complex. AI is no different: whenever you hand it a more complex task, it tends to hallucinate.

Even with the right prompts, the model you’re using may be more or less suited to a given task. Generally, Alex and Michael recommended GPT for more analytical tasks like coding, whereas a model like Claude is better suited to creative tasks. Ultimately, it’s a question of the quality you need versus speed and cost.

But you can navigate all of these pitfalls. It’s just a matter of knowing the right prompting principles.

7 Ways to Get More Consistent Results from AI

While we’ll cover plenty of tips and tricks for improving your prompts, we’ll start with the one thing that can improve your output the fastest: examples.

As Michael explained, there are a few ways to add examples to your prompts.

Zero-Shot: No examples are provided

One-Shot: One example is provided

Few-Shot (or Multi-Shot): Multiple examples are provided
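
To make the difference concrete, here is a minimal Python sketch that assembles a zero-, one-, or few-shot prompt from however many examples you pass in. The `build_prompt` helper, the Input/Output layout, and the product-name examples are illustrative assumptions, not a format Michael prescribed.

```python
from typing import List, Tuple

Example = Tuple[str, str]  # hypothetical (input, desired output) pair


def build_prompt(task: str, examples: List[Example], query: str) -> str:
    """Zero-shot with no examples, one-shot with one, few-shot with several."""
    lines = [task.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # Leave the last output open so the model continues the pattern.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


task = "Write a catchy product name for each item."
examples = [
    ("running shoes", "Run-Ready Sneakers"),
    ("coffee mug", "Morning Brew Mug"),
]

zero_shot = build_prompt(task, [], "desk lamp")
one_shot = build_prompt(task, examples[:1], "desk lamp")
few_shot = build_prompt(task, examples, "desk lamp")
print(few_shot)
```

The same function covers all three cases; the only thing that changes is how many worked examples the model sees before the open-ended query.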

If you’re working in the ChatGPT chat interface, one-shot and few-shot prompting are likely enough.

But if you’re building AI systems, you may want to consider using examples in conjunction with retrieval-augmented generation (RAG). Here’s Michael’s explanation of RAG:

“If you had a thousand examples to pick from, but you could only fit ten in the prompt, then you might want to select the best ten out of those thousand. RAG is a technique for finding, say, the 10 best examples to put into the prompt automatically. It will search the database for 10 examples of that question, or questions similar to yours, being answered, and then it will return those answers. So when you finally get a response, it’s much better because you’ve given a 10-shot example, and those shots are all relevant to what you asked.”
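
The retrieval step Michael describes can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: a production RAG system would typically use an embedding model and a vector database, whereas here a crude bag-of-words cosine similarity stands in for both, and `top_k_examples` and the sample support questions are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

QA = Tuple[str, str]  # hypothetical (question, answer) pair in the example library


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts: a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_examples(query: str, library: List[QA], k: int = 10) -> List[QA]:
    """Return the k stored pairs whose questions look most like the query."""
    q = bag_of_words(query)
    ranked = sorted(library, key=lambda pair: cosine(q, bag_of_words(pair[0])), reverse=True)
    return ranked[:k]


library = [
    ("How do I reset my password?", "Go to Settings > Account > Reset password."),
    ("What is your refund policy?", "Full refunds within 30 days."),
    ("Can I change my password in the app?", "Yes, under Profile > Security."),
    ("Which payment methods do you accept?", "Visa, Mastercard, and PayPal."),
]

query = "I forgot my password. How can I change it?"
selected = top_k_examples(query, library, k=2)  # k=2 for a tiny library; Michael's example used 10

# Build a few-shot prompt from only the most relevant examples.
prompt = "Answer the customer's question.\n\n"
for question, answer in selected:
    prompt += f"Q: {question}\nA: {answer}\n\n"
prompt += f"Q: {query}\nA:"
```

Swapping `bag_of_words` for real embeddings changes the quality of the ranking, not the shape of the pipeline: score every stored example against the question, keep the top k, and put them in the prompt.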

While examples will probably get you furthest in refining the model’s response, a few more techniques can advance your results even further.

Alex recommended following examples with chain-of-thought prompting. In this method, you ask the AI to explain its reasoning and refine that reasoning at each step. “Think about these elements and have it tell you that reasoning. As it’s generating the output, the model will perform a lot better because, again, it’s a probabilistic model, right? So as it reasons, it becomes more accurate.”
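
One way to apply this is to append a reasoning instruction to the task and ask for the final answer on a clearly marked line, so you can still pull out a clean result. The wording of `COT_SUFFIX`, the `Answer:` convention, and the sample response are assumptions for illustration; the response is hand-written, not real model output.

```python
COT_SUFFIX = (
    "Before answering, think through the problem step by step and write out "
    "your reasoning. Then give your final answer on a last line that starts "
    "with 'Answer:'."
)


def chain_of_thought_prompt(task: str) -> str:
    """Append a step-by-step reasoning instruction to any task."""
    return f"{task.strip()}\n\n{COT_SUFFIX}"


def extract_answer(response: str) -> str:
    """Pull the final answer out of a reasoning-then-answer response."""
    for line in reversed(response.strip().splitlines()):
        if line.strip().lower().startswith("answer:"):
            return line.split(":", 1)[1].strip()
    # Fall back to the whole response if the model ignored the format.
    return response.strip()


# A hand-written response in the shape the prompt asks for.
sample_response = """Step 1: The plan costs $20 per month.
Step 2: A year has 12 months, so 12 x 20 = 240.
Answer: $240"""

print(chain_of_thought_prompt("How much does the plan cost per year?"))
print(extract_answer(sample_response))
```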

Next, avoid negative language: tell the model what to do rather than what not to do. As Alex pointed out, “it doesn’t interpret not doing as well as it does doing.”

Along the same lines, be precise in your instructions. Rather than prompting with “be short and don’t write too many sentences,” prompt with “Give me a maximum of 10 sentences.”
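
A side benefit of positive, precise instructions is that you can check them. The sketch below contrasts the two phrasings and adds a rough sentence counter to verify a reply against the 10-sentence limit. The regex-based splitting is a simplifying assumption and will miscount abbreviations such as “e.g.”

```python
import re

# Vague and negatively phrased: harder for the model to follow, impossible to check.
VAGUE = "Summarize this article. Be short and don't write too many sentences."
# Positive and precise: a concrete limit the model can aim for and you can verify.
PRECISE = "Summarize this article. Give me a maximum of 10 sentences."


def count_sentences(text: str) -> int:
    """Rough count: splits on runs of . ! ? followed by whitespace or the end."""
    parts = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([p for p in parts if p])


def within_limit(reply: str, max_sentences: int = 10) -> bool:
    """True if the reply respects the sentence limit stated in PRECISE."""
    return count_sentences(reply) <= max_sentences
```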

If you’re still not getting the results you’d like, tell your AI assistant to adopt a role. As Michael explained, this helps narrow the AI’s knowledge base to roughly where it will find the necessary answers.
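
In chat-style APIs, a role is commonly set in a system message that sits ahead of the user’s request. The sketch below builds that message list in the `role`/`content` dictionary format used by OpenAI’s Chat Completions API (other providers differ; Anthropic’s Messages API, for example, takes the system prompt as a separate top-level parameter). The `with_role` helper and the copywriter persona are hypothetical, and no API call is made.

```python
from typing import Dict, List

Message = Dict[str, str]


def with_role(role_description: str, user_request: str) -> List[Message]:
    """Put the persona in a system message so it frames every later turn."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


# Hypothetical persona and request, for illustration only.
messages = with_role(
    "an experienced B2B SaaS copywriter who writes concise, benefit-led headlines",
    "Write three headline options for a landing page about our analytics dashboard.",
)
```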

Also consider breaking complex prompts into a series of smaller steps. This reduces the complexity the model has to handle at any one time, making hallucinations less likely.
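
Breaking a task into steps is often called prompt chaining: each step gets its own prompt, and its output becomes the input to the next. Here is a minimal sketch that assumes a hypothetical `model` function (prompt in, text out); the three steps are an invented example, and `fake_model` is a stub so the code runs without any API.

```python
from typing import Callable, List

ModelFn = Callable[[str], str]  # hypothetical: prompt in, model's text reply out


def run_chain(model: ModelFn, steps: List[str], source: str) -> str:
    """Send each step as its own prompt, feeding the previous output forward."""
    current = source
    for instruction in steps:
        current = model(f"{instruction}\n\nText:\n{current}")
    return current


steps = [
    "List the key claims made in this text, one per line.",
    "For each claim, note whether the text gives supporting evidence.",
    "Write a 3-sentence summary using only the claims that have evidence.",
]

calls: List[str] = []


def fake_model(prompt: str) -> str:
    # Stub so the chain runs offline: records the prompt, echoes its first line.
    calls.append(prompt)
    return "done: " + prompt.splitlines()[0]


result = run_chain(fake_model, steps, "Our new feature cut onboarding time in half.")
```

Each call only has to handle one narrow instruction, which is the point: the model never sees the whole multi-part task at once.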

Finally, keep in mind that your AI’s context (the amount of text it can take into account at once) is limited, even when you’ve uploaded documents.
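
When a document is too long to fit, one common workaround is to split it into chunks that each fit a budget and process them separately. This sketch estimates tokens with the rough rule of thumb of about four characters per token for English text; that ratio is an approximation, so use your model’s actual tokenizer when precision matters. `chunk_by_budget` is a hypothetical helper.

```python
import math
from typing import List

CHARS_PER_TOKEN = 4  # rough rule of thumb for English; real tokenizers vary


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def chunk_by_budget(paragraphs: List[str], max_tokens: int) -> List[str]:
    """Group paragraphs so each chunk's estimated token count (ignoring
    separators) stays within max_tokens.

    A single paragraph larger than the budget still becomes its own
    (oversized) chunk; split those further before sending them.
    """
    chunks: List[str] = []
    current: List[str] = []
    used = 0
    for para in paragraphs:
        cost = estimate_tokens(para)
        if current and used + cost > max_tokens:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append("\n\n".join(current))
    return chunks


paragraphs = ["a" * 400] * 5  # five paragraphs of roughly 100 tokens each
chunks = chunk_by_budget(paragraphs, max_tokens=250)
```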

With those tactics under your belt, you should be well on your way to getting consistent results out of your prompts. But a bit of experimentation can take your results even further.

Experimenting with Prompts

Getting your prompts right really comes down to iteration. Here’s how Michael described it:

“It reminds me a lot of growth hacking, actually, in that you have some hypothesis of what you think would work. You’re trying to second-guess what the behavior or responses would be to the words you’re putting out there, whether it’s copy on landing pages or a prompt in ChatGPT. And then you’re A/B testing to see: OK, when I do it this way, what type of response do I get? When I do it that way, what type?”

In some cases, you may have to test different prompting methods across different models. For example, GPT tends to follow instructions better when they appear at the beginning of the prompt, while Claude responds better when you put those instructions at the end.

Then, once you’ve figured out which model is best for your purposes, you can test prompt variations within that model. Alex walked through his own process for this kind of intra-model testing during the session.
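
A/B testing prompts can be as simple as running each variant over the same inputs several times and averaging a score. The sketch below compares instructions-first against instructions-last placement, echoing the GPT versus Claude difference above. It is not Alex’s own process: the model and scoring functions are hypothetical stand-ins (`fake_model` just echoes the first line of its prompt and `score` is a toy check), so the numbers only demonstrate the mechanics.

```python
from statistics import mean
from typing import Callable, Dict, List

PromptBuilder = Callable[[str, str], str]
ModelFn = Callable[[str], str]    # hypothetical: sends a prompt, returns the reply
ScoreFn = Callable[[str], float]  # hypothetical: rates a reply, higher is better


def instructions_first(instructions: str, content: str) -> str:
    return f"{instructions}\n\n{content}"


def instructions_last(instructions: str, content: str) -> str:
    return f"{content}\n\n{instructions}"


def ab_test(model: ModelFn, score: ScoreFn, variants: Dict[str, PromptBuilder],
            instructions: str, inputs: List[str], trials: int = 3) -> Dict[str, float]:
    """Average score for each prompt variant over the same inputs."""
    results: Dict[str, float] = {}
    for name, build in variants.items():
        # Repeat each input because the same prompt can yield different replies.
        scores = [
            score(model(build(instructions, text)))
            for text in inputs
            for _ in range(trials)
        ]
        results[name] = mean(scores)
    return results


def fake_model(prompt: str) -> str:
    # Toy stand-in for a real model: returns the first line of the prompt.
    return prompt.splitlines()[0]


def score(reply: str) -> float:
    # Toy metric: 1.0 when the echoed line is the instruction line.
    return 1.0 if reply.startswith("Summarize") else 0.0


results = ab_test(
    fake_model,
    score,
    {"first": instructions_first, "last": instructions_last},
    "Summarize in one sentence.",
    ["Q3 revenue grew 12%.", "Churn fell to 3%."],
)
```

With a real model, `score` is where your judgment goes: a rubric, a checklist, or a human rating recorded per reply.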

How to Practice Your Prompt Engineering Skills

Like any other skill, prompt engineering only improves with practice. But with the myriad use cases for AI, figuring out how to practice can be overwhelming.

Alex and Michael both gave similar advice: practice with tasks you perform on a regular basis.

For Alex, it comes down to using AI to automate tasks or help you in your day-to-day work:

“Ultimately, for most people, it should help them be more productive in any way, right? And just testing as much as you can, you know, and adjusting your prompts, starting a new chat window and trying again, doing that will get you into the rhythm of how it can help you and very quickly, you’ll kind of put pieces of the puzzle together.”

Michael did warn that testing on your day-to-day tasks may lead you to be “hard on the AI and generous to yourself,” so you underestimate what the AI is capable of. Blind testing is a great way to keep that tendency in check. Here’s how Michael usually runs these tests:

“My favorite thing to do is to take a task that a human has done, see if I can replicate that task with prompting, and then do a blind test with one of my coworkers: okay, which results do you like, A or B? And not tell them which one is AI. I would say I have about a 40% success rate with that in terms of tricking people, where they couldn’t guess which one was AI.”
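
The mechanics of a blind test like this are easy to script: shuffle the human and AI outputs under neutral labels, keep the answer key hidden, and tally how often raters fail to pick out the AI. This is an illustrative sketch; the function names and sample strings are hypothetical, and it does not reproduce Michael’s results.

```python
import random
from typing import Dict, List, Tuple


def make_blind_pair(human: str, ai: str, rng: random.Random) -> Tuple[Dict[str, str], str]:
    """Randomly assign the two outputs to labels A and B; return them and the AI's label."""
    if rng.random() < 0.5:
        return {"A": human, "B": ai}, "B"
    return {"A": ai, "B": human}, "A"


def fooled_rate(ai_labels: List[str], guesses: List[str]) -> float:
    """Share of trials where the rater's guess for 'which one is AI' was wrong."""
    misses = sum(1 for key, guess in zip(ai_labels, guesses) if key != guess)
    return misses / len(ai_labels)


rng = random.Random(7)  # fixed seed so the shuffle is reproducible
pair, ai_label = make_blind_pair("Human-written headline", "AI-written headline", rng)
# Show `pair` to a coworker, ask which output is AI, and record their guess
# ("A" or "B") next to ai_label, which stays hidden from them.
```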

The more you test, experiment, and practice with AI, the better your skills will become and the more consistent your results will get. In time, you may even be able to call yourself a prompt engineer.

For even more tips, watch Michael and Alex’s GrowthMentor LIVE session, “Up Your Prompt Engineering Game.”

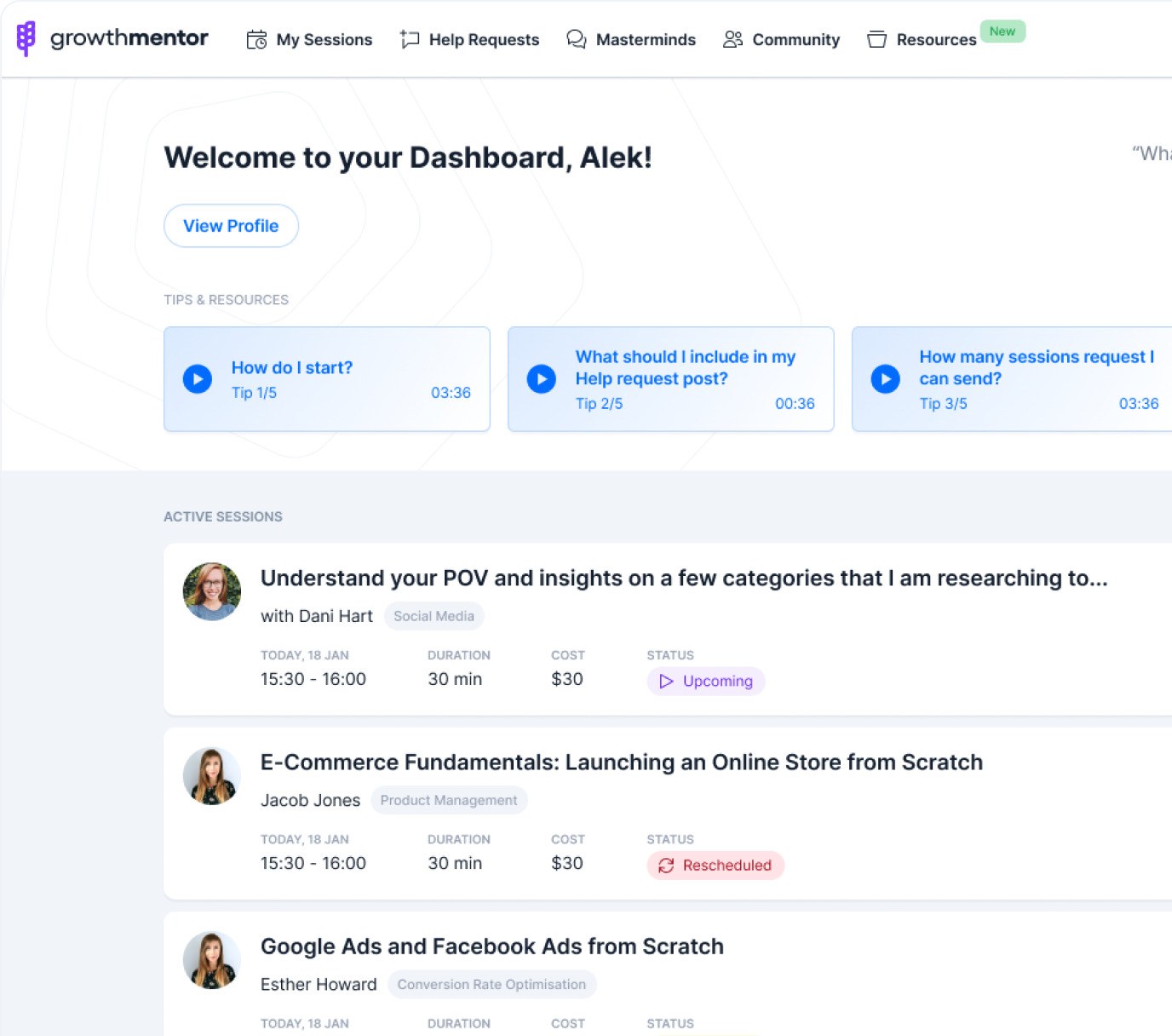

Want even more help refining your skills from Michael, Alex, or dozens of other AI-expert mentors? Join GrowthMentor Pro.
